Predicting the Failing Test

Part 2 of Predictive Test-Driven Development: after defining the new behavior, we write a test and predict how it will fail, so we can check that it fails for the expected reason.


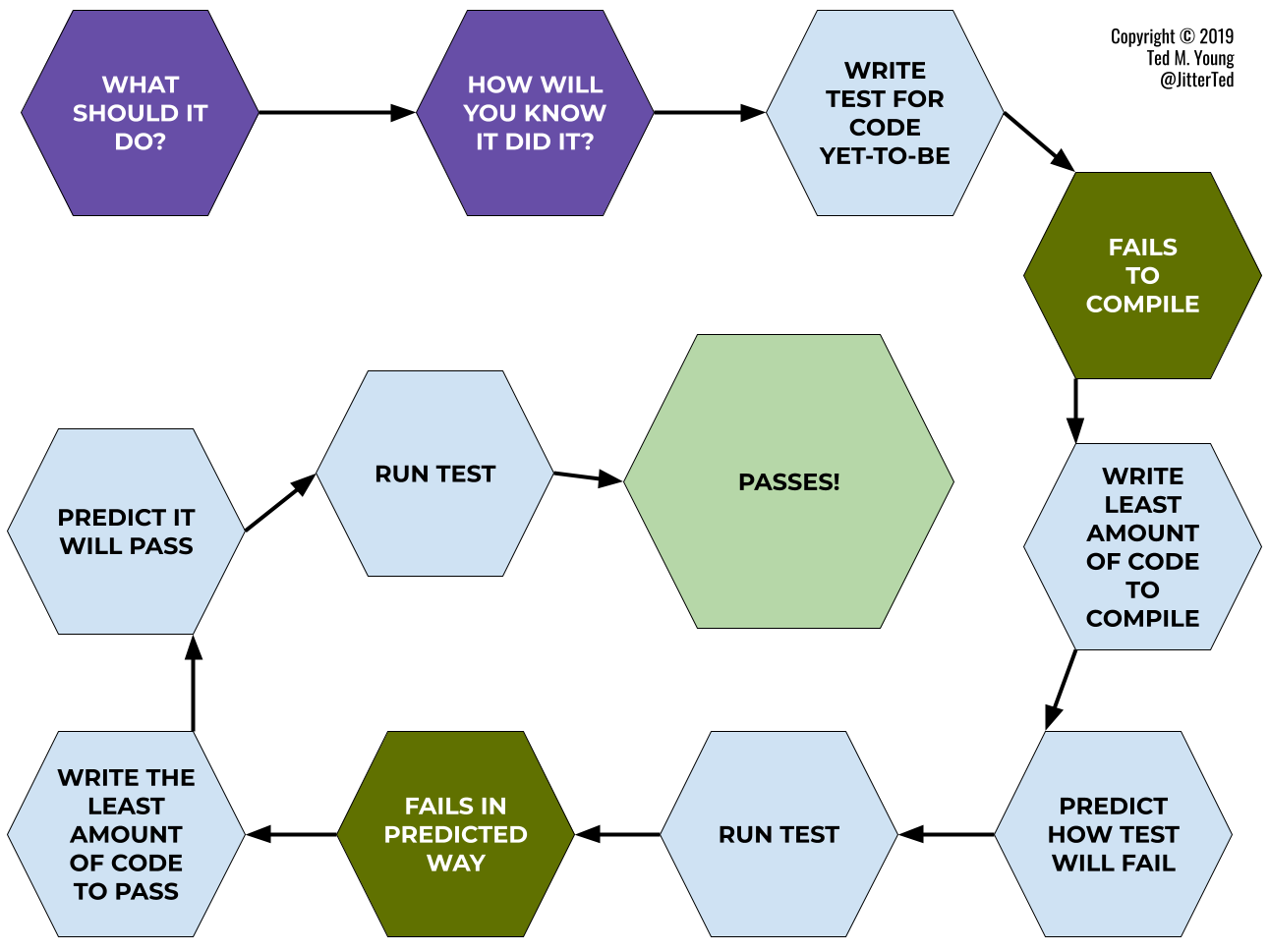

Way back in March 2021, I started explaining my Predictive Test-Driven Development process. The whole process looks like this:

In part 1, we looked at the beginning of the process, answering the questions:

- What should it do? and
- How will you know it did it?

These questions help clarify our goal for the next bit of behavior change, i.e., what we want our system to do that it currently doesn’t. We need this clarity to take the next step: writing a failing test.

The Failing Test

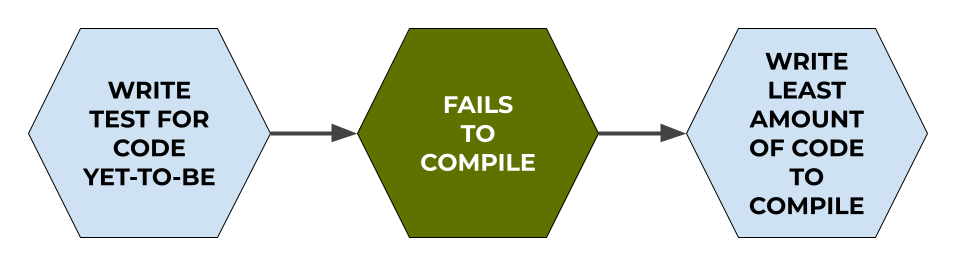

Our next major goal is to create a test and watch it fail. These next three steps in the process get us closer:

- Write Test for Code Yet-to-Be: this reminds us that we’re writing test code for behavior that doesn’t yet exist, or that differs from what exists now, as when fixing a bug.
- Fails to Compile: when testing new behavior, we may need to create a new method, or even a new class.
- Write Least Amount of Code to Compile: for any non-existent methods or classes, our job here is to only create them, not implement them. Treat this like a game: how little code can you write to get the code to compile?

Let’s look at the example from the domain of a single-player version of the Blackjack card game from Part 1:

Given a PLAYER with a HAND containing 2 CARDs,

When the PLAYER DRAWs a CARD from the DECK,

Then the HAND should have 3 CARDs.

The new behavior here is having the player draw (take) a card from the deck[1]. Here’s a JUnit test that implements our example:

```java
@Test
public void playerWith2CardsDrawsCardThenHas3Cards() {
    Game game = new Game();

    game.playerDrawCardFromDeck();

    assertThat(game.playerCards())
            .hasSize(3);
}
```

At this point, the method playerDrawCardFromDeck()[2] does not yet exist, so it doesn’t compile (and shows as red in my development environment). Now we do the minimum work possible to get the code to compile. We do that by creating the missing method in the Game class:

```java
public void playerDrawCardFromDeck() {
}
```

Notice it’s empty. We don’t implement anything yet; we write just enough code to compile so we can watch the test (hopefully!) fail. Next, let’s run the test.

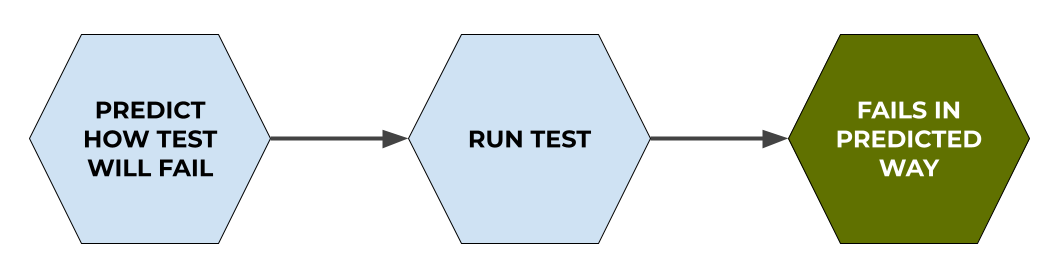

Predict How the Test Fails

Stop! Don’t run that test yet!

It’s tempting to go from getting the test to compile to running it and watching it fail, but you’d be missing an important part: prediction.

For many years, I happily did TDD without predicting how the test would fail, and was successful at it. At some point, I noticed a common mistake: assuming the failing test failed for the expected reason, namely the yet-to-be-written production code. What if it failed for a different reason? What if it failed because you misunderstood the existing code? Or because the test setup (the “given”) was incorrect? If you assumed the test failed because of missing behavior, you’d be confused when the test continued to fail even after implementing that behavior.

In our example, we expect the test to fail because playerDrawCardFromDeck() does nothing. That should mean the number of cards the player has is two and not three. Let’s run the test with that prediction, i.e., the assertion will fail because our expected result of 3 is not the actual result of 2.

![Test failed with: java.lang.AssertionError: Expected size: 3 but was: 0 in: []](/assets/unexpected-assertion-failure.png)

Uh oh. That’s not what we predicted (expected). The test is failing (yay!), but for the wrong reason (boo!). Why does the player have 0 cards? Let’s look at our test again:

```java
@Test
public void playerWith2CardsDrawsCardThenHas3Cards() {
    Game game = new Game();

    game.playerDrawCardFromDeck();

    assertThat(game.playerCards())
            .hasSize(3);
}
```

Oops. We forgot to tell the Game to do the initial deal, which deals two cards each to the player and dealer. Let’s fix that:

```java
@Test
public void playerWith2CardsDrawsCardThenHas3Cards() {
    Game game = new Game();
    game.initialDeal(); // added: deal two cards each to player and dealer

    game.playerDrawCardFromDeck();

    assertThat(game.playerCards())
            .hasSize(3);
}
```

Let’s run the test again – but wait! Let’s repeat our prediction: we will expect 3, but the actual result will be 2.

```
java.lang.IndexOutOfBoundsException: Index 0 out of bounds for length 0
    at java.base/java.util.ArrayList.remove(ArrayList.java:536)
    at com.jitterted.ebp.blackjack.domain.Deck.draw(Deck.java:24)
    at com.jitterted.ebp.blackjack.domain.Hand.drawFrom(Hand.java:45)
    at com.jitterted.ebp.blackjack.domain.Game.dealRoundOfCards(Game.java:71)
    at com.jitterted.ebp.blackjack.domain.Game.initialDeal(Game.java:64)
```

Oh no, that’s even worse. What’s going on?

A closer look at initialDeal() reveals that we had some code left over from an experiment and forgot to remove it. And we forgot to run all of the tests before we started this session. Even we “experts” make silly mistakes like this[3]. However, our prediction prepared us to be on the lookout for anything out of the ordinary.

Let’s clean up initialDeal(), and, before we run the tests, we repeat our prediction so it’s fresh in our mind: we expect a size of 3, but the actual size will be 2.

```
java.lang.AssertionError:
Expected size: 3 but was: 2 in:
[Card {suit=CLUBS, rank=A}, Card {suit=CLUBS, rank=5}]
```

Success! Now it’s failing for the correct reason. It’s still failing, but at this point of the TDD process, we want it to fail[4]. And, just as important, we want it to fail due to the missing behavior, and not some other reason.
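To make this state easy to reproduce without JUnit or AssertJ, here is a minimal, self-contained sketch. The method names initialDeal(), playerDrawCardFromDeck(), and playerCards() come from the example above; everything else (cards as plain strings, the fixed four-card deck, the FailingForTheRightReason class, and the hand-rolled size check) is an assumption made for illustration and is not the real Game implementation.

```java
import java.util.ArrayList;
import java.util.List;

class FailingForTheRightReason {
    // Mirrors the test above with a plain size check instead of AssertJ.
    // Returns the failure message, or null if the check passes.
    static String runTest() {
        Game game = new Game();
        game.initialDeal();

        game.playerDrawCardFromDeck();

        int actualSize = game.playerCards().size();
        return actualSize == 3 ? null : "Expected size: 3 but was: " + actualSize;
    }

    public static void main(String[] args) {
        System.out.println(runTest()); // prints "Expected size: 3 but was: 2"
    }
}

// Hypothetical, simplified stand-in for the article's Game class.
class Game {
    // Assumption: cards are plain strings and the deck is a small fixed list.
    private final List<String> deck = new ArrayList<>(List.of("AC", "5C", "9D", "KH"));
    private final List<String> playerHand = new ArrayList<>();
    private final List<String> dealerHand = new ArrayList<>();

    // Deals two cards each to the player and the dealer.
    void initialDeal() {
        for (int round = 0; round < 2; round++) {
            playerHand.add(deck.remove(0));
            dealerHand.add(deck.remove(0));
        }
    }

    // Deliberately empty: just enough code to compile, no behavior yet.
    void playerDrawCardFromDeck() {
    }

    List<String> playerCards() {
        return List.copyOf(playerHand);
    }
}
```

Because the draw method is still empty, the hand stays at the two cards from the initial deal, which is exactly the failure we predicted.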

Prediction Prepares Us for Surprises

Over the years, I’ve found this extra prediction step invaluable in fighting the tendency to make assumptions[5]. By stating our prediction before we run the test, we are better prepared to notice any slight difference. I often state the prediction out loud, because it’s easy to lie to ourselves by thinking “oh yeah, that’s what I thought would happen” when we predict only in our heads.

Assumptions lead us astray so easily, and are frustrating because we’re trying to solve what we think is the problem instead of the actual problem. The prediction step won’t prevent all wrong assumptions, but it eliminates enough of them to make it well worth the effort.
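If saying the prediction out loud isn’t practical, one option is to write it down before running the test and compare it with what actually happens. The sketch below is only an illustration of that idea: PredictionCheck, compare(), and checkSize() are hypothetical names invented here, not part of JUnit, AssertJ, or the Blackjack code.

```java
class PredictionCheck {
    // Hypothetical helper: runs a test body and compares the way it actually
    // failed with the failure we wrote down beforehand.
    static String compare(String predictedFailure, Runnable test) {
        try {
            test.run();
        } catch (AssertionError | RuntimeException failure) {
            String actualFailure =
                    failure.getClass().getSimpleName() + ": " + failure.getMessage();
            if (actualFailure.equals(predictedFailure)) {
                return "AS PREDICTED: " + actualFailure;
            }
            return "SURPRISE: predicted <" + predictedFailure
                    + "> but got <" + actualFailure + ">";
        }
        return "SURPRISE: predicted <" + predictedFailure + "> but the test passed";
    }

    // Stand-in for an assertion library's size check.
    static void checkSize(int expected, int actual) {
        if (expected != actual) {
            throw new AssertionError("Expected size: " + expected + " but was: " + actual);
        }
    }

    public static void main(String[] args) {
        // Written down BEFORE running anything.
        String prediction = "AssertionError: Expected size: 3 but was: 2";

        // First run in the article: the hand was empty, so the prediction misses.
        System.out.println(compare(prediction, () -> checkSize(3, 0)));

        // Final run: the hand has two cards, so the prediction matches.
        System.out.println(compare(prediction, () -> checkSize(3, 2)));
    }
}
```

The first line of output starts with SURPRISE and the second with AS PREDICTED. The mismatch in the first run is the signal to stop and investigate before writing any production code.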

Next Up

In the next part, we can finally write some production code! Stay tuned. If you have questions or want to discuss PTDD, join my free Discord community.

The TDD Intro Series

- Red-Green or Refactoring First?

- Clarifying the Goal of Behavior Change

- Predicting the Failing Test (this article)

- Implementing the Feature

- Tightening Our Assertions

[1] In the real world, players don’t take cards, they signal the dealer that they want another card. This is called “hit” in the language of Blackjack. Object-oriented programming doesn’t always mean simulating the real world as-is.

[2] You might wonder where the Deck is. In this example, the Game already has a reference to a Deck.

[3] If you want to see me make more mistakes, just watch me live code on Twitch.

[4] And so even though the test is failing, it’s actually a success. This is why the diagram shows this step in green and not red!

[5] There’s also research to back this up. See this study: Predicting as a learning strategy.